On a May morning in 1920, a train pulled into town on the Kentucky–West Virginia border. Its passengers included a small army of armed private security guards, who had been dispatched to evict the families of striking workers at a nearby coal mine. Meeting them at the station were the local police chief—a Hatfield of the infamous Hatfield-McCoy feud—and several out-of-work miners with guns.

The private dicks and the local militia produced competing court orders. The street erupted in gunfire. When the smoke cleared, ten men lay dead—including two striking miners, the town mayor, and seven of the hired guns.

The striking miners had worked for the Stone Mountain Coal Company, in mines located outside the city limits of Matewan. There, they rented homes that were owned by their employer, shopped at a general store that was owned by their employer, and were paid in a company-generated form of “cash” that could only be spent at that company store. When they joined a United Mine Workers organizing drive and struck for better pay, they were fired and blacklisted.

Without a union, a workplace can be a dictatorship. But what if your boss is also your landlord, your grocer, your bank, and your local police? That kind of 24/7 employer domination used to be a common practice before the labor movement and the New Deal order brought it to an end.

Today, however, the corporate assault on unions is leading to the return of the company town. These new company towns are dominated by one large business that owes no obligation to aid in the town’s well-being—quite the contrary, in fact. As was clear in this past summer’s failed UAW organizing drive at the Nissan plant in Canton, Mississippi, the ever-present threat that factory relocation poses to a one-company town bends the local power structure to the company’s will. That’s why so many of the newer large factories—like the auto and aerospace plants that have sprung up across the South in recent decades—are located in remote rural areas. That’s also one reason why organizing campaigns in those locales face very steep odds.

ALTHOUGH NEW ENGLAND clothing manufacturers experimented with company housing in the early 1800s, company towns really came into their own during the industrial revolution that followed the U.S. Civil War. They were common in industries where the work was necessarily physically remote, like coal mining and logging. The company simply owned all of the surrounding land and built cheap housing to rent to the workers they recruited. In new industries like steel production, factories were built in areas where land was cheap, and the companies bought lots of it. By constructing housing on the extra land, the companies found a great way to extract extra profit from their worker-tenants. Besides, a privately owned town enabled companies to keep union organizers away and to spy on potential union activity.

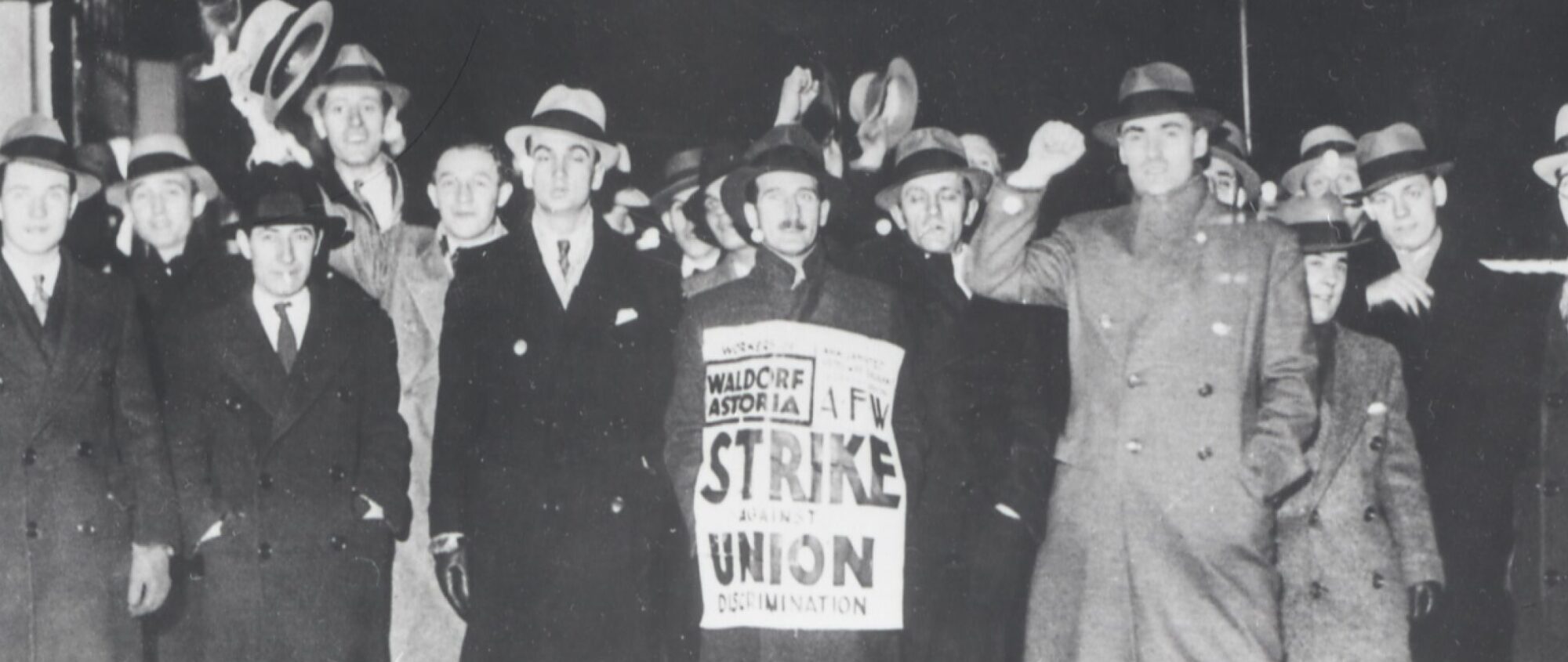

Some of the most infamous and bloody labor battles of the late 19th and early 20th centuries, like the Homestead strike and the Ludlow Massacre, were sparked by the violent eviction of striking workers from their company-owned housing.

Life was even more miserable for workers where the company-store system prevailed. Employers would own and operate a general store to sell the basic necessities to workers, with as much as a 20 percent markup. An 1881 Pennsylvania state investigation into union-buster and future-walking-head-wound Henry Clay Frick’s Coke Company found that the company cleared $160,000 in annual profit from its company store (that would be about $3.5 million today).

Some employers paid their employees in “company scrip,” a kind of I.O.U. that could only be exchanged for goods at the company store. A worker who was lucky enough to get paid in cash could be fired if he or she were caught bargain-hunting at an independent store in a neighboring town. And payday was often so meager and delayed that a worker might have to buy on credit, resulting in the kind of merry-go-round of debt and reduced-pay envelopes that is disturbingly similar to the practices of today’s “payday loans” predators. It’s not for nothing that the refrain of the classic song “Sixteen Tons” goes, “I owe my soul to the company store.”

The rise of unions and the New Deal order didn’t put an end to company towns per se, but companies that gave in to union recognition found less reason to own worker housing. However, companies that remained non-union, particularly in the South, continued to act as landlord, thereby instilling in their workers an additional layer of fear and oppression to keep unions out. Lane Windham’s excellent new book, Knocking on Labor’s Door: Union Organizing in the 1970s and the Roots of a New Economic Divide, mentions, almost in passing, that one Amalgamated Clothing and Textile Workers Union organizing target, the textile giant Cannon Mills, continued to run a company town into the 1980s. When the company was purchased in a leveraged buy-out in 1982, the new owners quickly decided to sell the 2,000 houses the company owned, giving workers 90 days to buy their homes or get out. The town of Kannapolis, North Carolina, finally incorporated and began electing its own city government after three-quarters of a century as a virtual dictatorship.

But Kannapolis’s conversion from a company town to a proper municipality only happened because an ailing firm in a globally competitive industry needed to sell off non-essential assets, and saw little need to be financially tethered to a community. The plant closed its doors for good in 2003, causing the largest mass layoff in North Carolina history.

Not all company towns were ramshackle developments. Some wealthy industrialists developed model company towns in misguided attempts at philanthropic social engineering.

George Pullman made a fortune building and leasing luxury sleeping cars to railroad companies. Pullman’s belief that the public would pay extra money for better-quality rail travel proved correct, and the Pullman Palace Car Company quickly had a monopoly in a market of its own invention. Pullman’s pressing need for new factories to meet consumer demand coincided with his growing paternalistic concern about poverty, disease, and alcoholism in the country’s industrial cities.

The town of Pullman was built in an area south of Chicago, near the Indiana border, adjacent to the Calumet River and the Illinois Central Railroad line. The company already owned some land there, and purchased more to begin construction in 1880. The housing that Pullman built was of much higher quality than what was typically found in working-class neighborhoods in industrial cities. There were green spaces and tree-lined streets. In the town center, he built a handsome and well-stocked library, a luxury hotel with the town’s only licensed bar, and a grand theater to feature “only such [plays] as he could invite his family to enjoy with utmost propriety.” Casting a shadow over the town was the towering steeple of the massive Greenstone Church.

There was no requirement that Pullman’s factory workers reside in his town, and many commuted from Chicago and neighboring villages. But 12,600 Pullman employees did choose to live in his city by 1893. Some were supervisors and social climbers. Many more were young workers who wanted to raise their families in a new, clean environment. By the mid-1880s, the town was gaining a reputation as “the world’s healthiest city” for its low death rate.

Pullman’s undoing was his tendency to run his town like his business. As with his sleeping cars, he owned all the property and leased it to residents. The rent on his one giant church was too high for most congregations to afford, and his ill-conceived attempt to convince all of the local denominations to merge into one generic mega-church failed. His library charged a membership fee to foster his notion of personal responsibility. Workers avoided the hotel bar and the watchful eye of “off-duty” supervisors, limiting their public carousing to a neighboring village colloquially known as “bum town.”

Pullman’s business sense led him to make a confounding choice for a civic father who was trying to instill middle-class values in his city: The housing, too, was for rent only. His aim was to ensure that the housing remained attractive and in good repair, and he charged higher rents to maintain it. Here, Pullman applied his usual belief that the public would pay more for higher quality, ignoring the fact that this particular public, his employees, had little choice when his was the only housing in town.

The Panic of 1893, and the severe economic downturn that followed, presented Pullman with a dilemma. His business slowed to a near halt. Any capitalist who did not also feel responsible for running a city would simply have laid off all but a skeleton crew of workers. In a more traditional company town, the laid-off workers would have been violently evicted by Pinkertons or the local police. The Pullman company reduced its workers’ hours but kept everyone employed on a reduced payroll. Crucially, however, the Pullman Land Trust did not reduce rents, plunging the town’s residents into financial crisis. Many workers fell behind on their rent. Their debt to Pullman had the effect of restricting their freedom to quit. It provoked a strike at the factory.

The strike was soon joined by a nationwide boycott backed by the new American Railway Union (ARU), which was led by Eugene V. Debs. Rail transportation around the country ground to a stop as members of the new industrial union refused to move trains that carried Pullman sleeping cars. The strike was violently crushed by federal troops and state militia, and its leaders were jailed. (Debs later said of the experience, “in the gleam of every bayonet and the flash of every rifle the class struggle was revealed.” He emerged from jail in 1895 as America’s most prominent socialist leader, calling the strike his “first practical lesson in Socialism.”)

George Pullman died in 1897, resentful of his reputation as a tyrant and of his model town’s ignominy.

Just a few years later, another bored plutocrat decided to build a model company town of his own. Friends cautioned Milton Hershey that Pullman had been a disaster for its owner. Warned that the town’s residents wouldn’t have elected George Pullman dogcatcher, Hershey responded, “I don’t like dogs that much.”

Hershey made his first fortune in caramel, and sold his confectionery business for the unprecedented (for caramel, anyway) sum of $1 million in 1900. Although he retained rights to a small chocolate subsidiary, it was more of a local novelty. Prior to the advent of milk chocolate, the sweet was a luxury treat for the wealthy, and it would not keep on long rail journeys, which ruled out mass production and distribution.

Then, like Pullman, Hershey became interested in solving the problems of modern industrial life. He founded the Hershey Chocolate Company to support his town—not vice versa. Hershey worked on a formula for milk chocolate that could be mass-produced, to provide his town with a sustainable industry.

The town broke ground in 1903, near dairy farms that could supply Hershey’s chocolate business. At the center of town was a 150-acre park, featuring a band shell, golf course, and zoo. After ten years, Hershey’s amusement park was receiving 100,000 visitors a year, making tourism a crucial second economic base for the model company town. Hershey built banks, department stores, and public schools. Unlike in Pullman, homeownership was a key part of Hershey’s vision and business model.

In a case of history repeating itself, Hershey was rocked by a Congress of Industrial Organizations sit-down strike during the Great Depression. In 1937, 600 workers took control of the factory for five days. Their sit-down was broken up by scabs and angry local farmers who had watched 800,000 pounds of milk spoil each day. The mob broke into the factory, battering and forcibly removing the strikers.

Thanks to the New Deal order, however, which saw an activist federal government defending the rights of workers, a permanent union presence was eventually established at Hershey (although the company maneuvered to have its favored representative, a more conservative AFL union, win a collective-bargaining agreement).

The town of Hershey, though by no means the utopia that Milton Hershey envisioned, exists today as a modestly successful tourist trap. The theme park and the still-operating chocolate factory continue to serve as a job base for locals.

COMPANY TOWNS ARE STILL with us. In the 21st century, company towns operate less like Pullman and more like Kannapolis during the years between Cannon Mills’s sale of its company housing and the final closure of the mill. The companies are no longer their employees’ landlords, but because they’re the only major employer for miles around, they still wield extraordinary power.

This past August’s NLRB election defeat for Canton, Mississippi’s Nissan workers, who sought to be represented by the United Automobile Workers (UAW), should put unions on notice that company towns are not some relic from our sepia-toned past, but an essential feature of 21st-century manufacturing employment in the United States.

In 2003, Nissan, a Japanese multinational carmaker now valued at $41 billion, located an auto assembly plant in the tiny town of Canton. The factory employs around 6,500 workers, while the town is home to roughly 13,000 residents.

In the run-up to the union election, Nissan did what almost every employer does. It didn’t threaten to fire union activists, because that would be too obviously illegal. Instead, management merely predicted that the invisible hand of the market would force it to shut down a newly unionized factory and ship all of the jobs out of town. Thus terrorized, the entire political establishment of Canton, its churches, and the workers’ own neighbors amplified this threatening message to potential UAW supporters.

The company inundated the local airwaves with television ads in which a local pastor compared the ostensibly horrific period before Nissan arrived—when residents were “fluctuating back and forth looking for jobs”—with the good news that Nissan employees can “come through the door knowing the lights are on, the water is running.”

It actually makes sound business sense for multiple competing businesses in the same industry to be located in close physical proximity to each other. There are economies of scale that can be achieved through shared distribution channels, a major airport, a shared community of professional engineering talent, an education system designed to build the bench, and an ecosystem of parts suppliers and other complementary businesses.

It just doesn’t make business sense if you’re trying to operate on a union-free basis. The fact that Chrysler, GM, and Ford workers were friends and neighbors in Detroit and its suburbs helped organizers foster a culture of solidarity that was essential to organizing the auto industry in the 1930s and 1940s. The fact that few new auto factories, foreign or domestic, have been built anywhere near Detroit—or anywhere near each other—for more than half a century is not an accident. It’s not the result of “free trade,” of the tax-cutting “savvy” of Southern politicians, or of some inherent deficiency of the so-called Rust Belt.

It’s the product of a bloody-minded determination by “job creators” to avoid the conditions under which unions are even possible. From the overuse of “independent contractors” to sub-contracting and outsourcing, to locating new factories in small and remote geographies, corporations in America strategically structure their business to avoid the reach of NLRB-certified, enterprise-based collective bargaining.

These business practices make it clear that employers will continue to evade and sabotage any system of labor rights that is tied to individual workplaces, rather than one that applies to entire industries. We will need new labor laws and new models of worker representation to democratize our communities.

[This article originally appeared at the American Prospect.]